The main goal of this lab is to give the student experience measuring and interpreting the spectral reflectance of various Earth surfaces in satellite images. The lab also teaches the student how to collect spectral signatures, graph them, and analyze whether they pass the spectral separability test. This type of analysis is a prerequisite for image classification.

Methods:

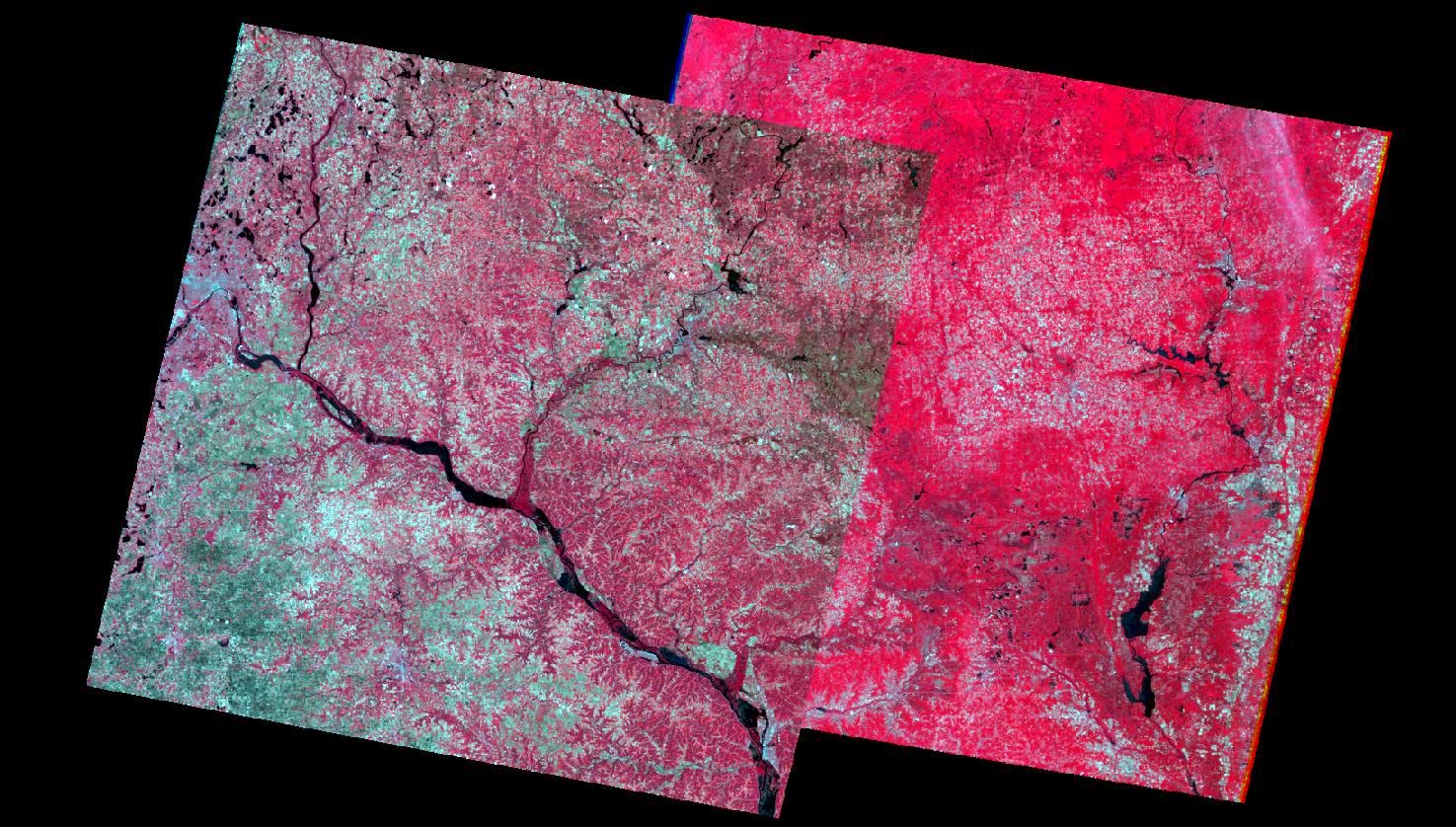

This lab has the student plot the spectral reflectance of twelve different Earth surface features: standing water, moving water, vegetation, riparian vegetation, crops, urban grass, dry soil (uncultivated), moist soil (uncultivated), rock, asphalt highway, airport runway, and a concrete surface.

Once each feature had been identified, a small polygon was digitized around it in Erdas Imagine. With the polygon created, the raster processing tools were activated, which enabled the signature editor window to be opened. The signature editor window lets the user name each digitized feature and open the mean plot window. This plot shows the mean pixel value of the feature in each band, which allows the student to identify the bands with the greatest and least reflectance for each feature.
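To make the idea behind the mean plot concrete, here is a minimal NumPy sketch of the same calculation done outside Erdas Imagine. It is not how the signature editor is implemented. The function names (`mean_signature`, `extreme_bands`), the six-band layout, and the pixel values are all made up for illustration, and a rectangular mask stands in for the digitized polygon.

```python
import numpy as np

def mean_signature(image, mask):
    """Return the mean pixel value per band for the pixels selected by mask.

    image: array of shape (bands, rows, cols)
    mask:  boolean array of shape (rows, cols), True inside the sampled area
    """
    if not mask.any():
        raise ValueError("mask selects no pixels")
    # image[:, mask] has shape (bands, n_selected_pixels)
    return image[:, mask].mean(axis=1)

def extreme_bands(signature):
    """Return the 1-based band numbers with the greatest and least mean value."""
    return int(np.argmax(signature)) + 1, int(np.argmin(signature)) + 1

# Hypothetical 6-band, 4x4 image: every pixel in band b starts at 10*b (made-up data).
bands = 6
image = np.stack([np.full((4, 4), 10.0 * (b + 1)) for b in range(bands)])
# Make band 4 brighter inside the sampled area so it stands out in the signature.
image[3, 1:3, 1:3] = 90.0

# Rectangular stand-in for a small digitized polygon.
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

signature = mean_signature(image, mask)
highest, lowest = extreme_bands(signature)
print("Mean value per band:", signature)
print("Greatest in band", highest, "and least in band", lowest)
```

Plotting `signature` against band number gives a curve of the same kind as the mean plot window, one curve per digitized feature.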

Results:

The figures below are the results of spectral analysis performed on all twelve features.

Figure 1. This is the mean plot window for the airport runway feature.

Figure 2. This is the mean plot window for the asphalt highway feature.

Figure 3. This is the mean plot window for the concrete surface feature.

Figure 4. This is the mean plot window for the crops feature.

Figure 5. This is the mean plot window for the dry soil feature.

Figure 6. This is the mean plot window for the moist soil feature.

Figure 7. This is the mean plot window for the moving water feature.

Figure 8. This is the mean plot window for the riparian vegetation feature.

Figure 9. This is the mean plot window for the rock feature.

Figure 10. This is the mean plot window for the standing water feature.

Figure 11. This is the mean plot window for the urban grass feature.

Figure 12. This is the mean plot window for the vegetation feature.